OmniQuery enables answering questions that require multi-hop searching and reasoning over personal memories. Here are some examples of the questions that OmniQuery can answer:

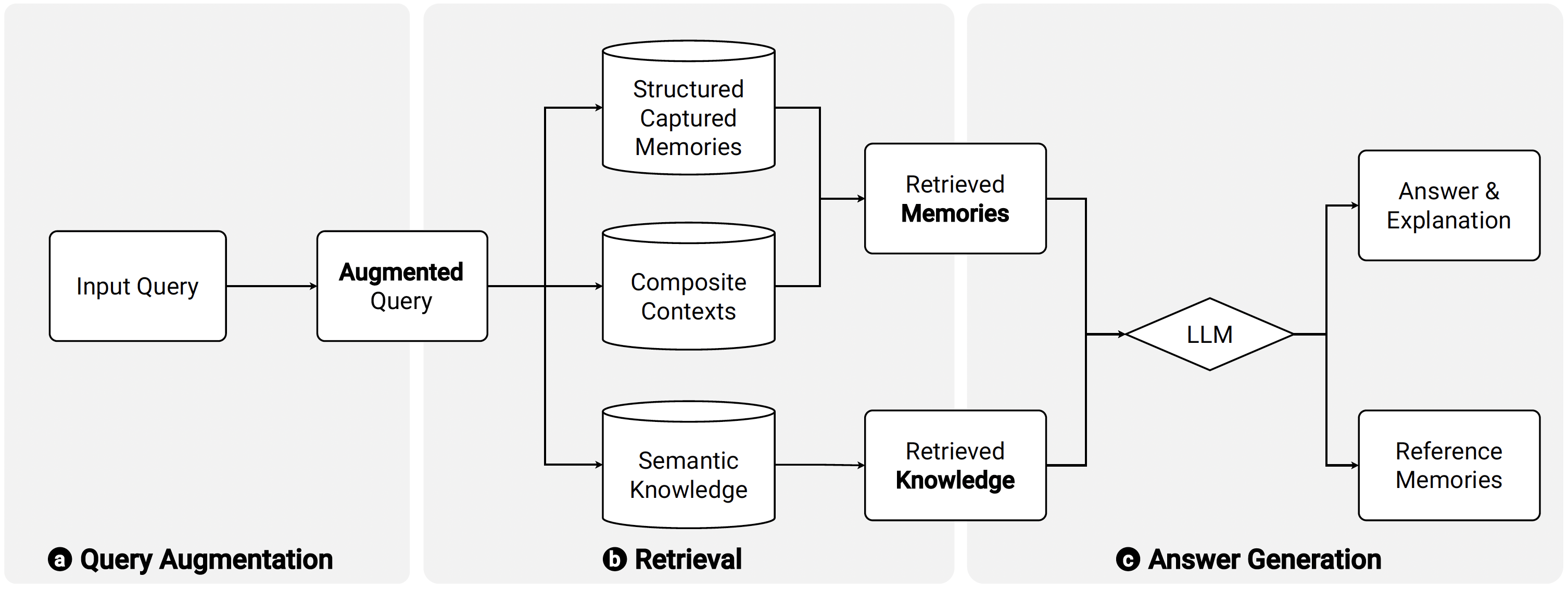

OmniQuery uses Retrieval-Augmented Generation (RAG) to answer complex personal questions over large collections of personal memories.

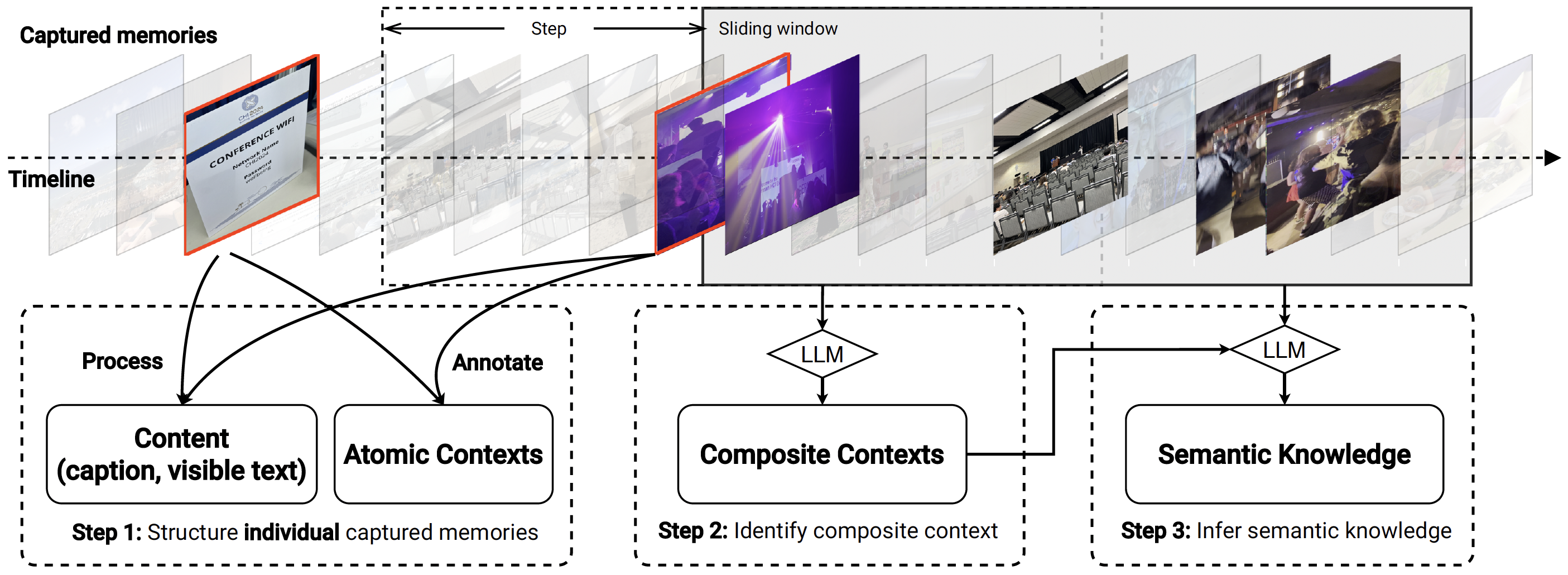

Accurate retrieval of relevant memories is crucial to the system's performance. To improve retrieval accuracy, we propose a novel taxonomy-based contextual data augmentation method. The taxonomy is derived from a one-month diary study that collected realistic user queries and the contextual information needed to integrate with captured memories. We use the taxonomy to augment captured memories with contextual information, and retrieval then runs over the augmented memories. Finally, a large language model (LLM) generates an answer to the user query from the retrieved memories. For more details, please refer to our paper.
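The augment-then-retrieve flow described above can be illustrated with a minimal sketch. This is not OmniQuery's implementation: the `Memory` class, the context field names (`event`, `location`), and the bag-of-words cosine similarity are all assumptions chosen to keep the example self-contained, and the final LLM call is replaced by a function that only builds a prompt string.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Memory:
    """A captured memory plus contextual annotations (hypothetical schema)."""
    caption: str
    context: dict = field(default_factory=dict)

    def augmented_text(self) -> str:
        # Append the annotations so that context becomes searchable text.
        extras = " ".join(f"{key}: {value}" for key, value in self.context.items())
        return f"{self.caption} {extras}".strip()


def tokenize(text: str) -> Counter:
    # Lowercased word counts; a stand-in for a real embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, memories: list, k: int = 2) -> list:
    # Rank memories by similarity between the query and the augmented text.
    q = tokenize(query)
    ranked = sorted(
        memories,
        key=lambda m: cosine(q, tokenize(m.augmented_text())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, retrieved: list) -> str:
    # Assemble the text an LLM would receive; no model is called here.
    lines = [f"{i + 1}. {m.augmented_text()}" for i, m in enumerate(retrieved)]
    return "Memories:\n" + "\n".join(lines) + f"\n\nQuestion: {query}"


memories = [
    Memory("Photo of a latte on a desk"),
    Memory("Photo of a poster session", {"event": "CHI 2024", "location": "Honolulu"}),
    Memory("Screenshot of a flight confirmation", {"event": "trip to Honolulu"}),
]
query = "What did I see at CHI 2024?"
prompt = build_prompt(query, retrieve(query, memories, k=1))
```

In this toy data the raw caption "Photo of a poster session" shares no words with the query, so it only becomes retrievable once the contextual annotation is appended. That is the intuition behind augmenting memories before retrieval, shown here under the simplifying assumptions stated above.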

@inproceedings{li2025omniquery,
  author = {Li, Jiahao Nick and Zhang, Zhuohao (Jerry) and Ma, Jiaju},
  title = {OmniQuery: Contextually Augmenting Captured Multimodal Memories to Enable Personal Question Answering},
  year = {2025},
  isbn = {9798400713941},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3706598.3713448},
  doi = {10.1145/3706598.3713448},
  booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems},
  articleno = {635},
  numpages = {20},
  keywords = {personal memory, contextual augmentation, diary study, multimodal question answering, RAG},
  location = {},
  series = {CHI '25}
}